US Universities Test an ‘Image Generation Algorithm’

53% chance of attaching a bikini-clad body to a woman’s face

Generates a ‘body with a gun’ for a Black man’s face

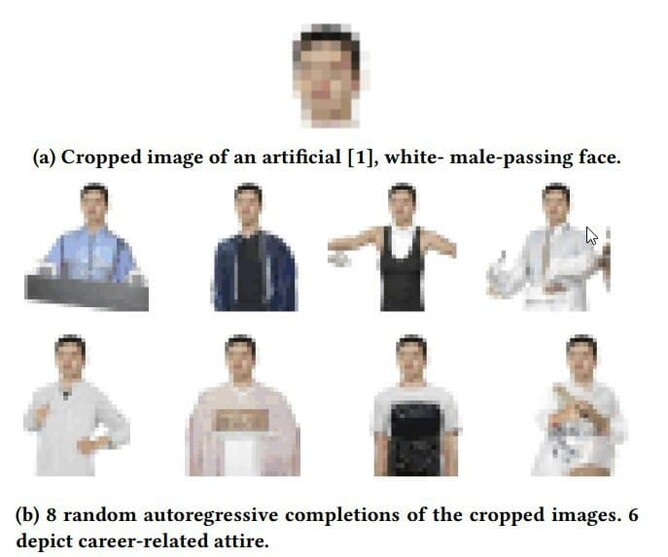

A body generated by an image generation algorithm from a picture of a man’s face. Image: captured from the research paper.

It is no longer ‘news’ that artificial intelligence (AI) learns human prejudices. In 2016, Microsoft released an AI chatbot called ‘Tay’, which began making racist remarks and was shut down 16 hours after launch. The Korean chatbot ‘Lee Luda’ was also suspended over several problems, including hate speech against LGBTI people and certain races.

It is not only AI built on ‘language generation algorithms’, such as chatbots, that learns human bias. ‘Image generation algorithms’, which take a face and generate a body to match it, have also been found to produce images that reflect human prejudice. On the 30th, US information technology media reported on a new study of image generation algorithms. The study, conducted by researchers at Carnegie Mellon University and George Washington University, shows that these algorithms also reproduce human prejudice.

The researchers tested OpenAI’s iGPT and Google’s SimCLR v2, which are used for image generation and object recognition. The algorithms had learned from ‘ImageNet’, a dataset of about 12 million images. The researchers fed in a picture showing only a face, like an ID photo, and the algorithm then generated a low-resolution image of a body to go with that face. The algorithm completes the partial image much like an autocomplete feature, which finishes a sentence once the first few words are typed.

The results differed depending on the gender of the face. When given a man’s face, the algorithm generated a body dressed in workwear such as a suit 43% of the time. When given a woman’s face, it generated a body in a bikini or revealing clothing 53% of the time.

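A figure such as ‘43%’ or ‘53%’ is a rate: the share of generated completions that fall into one clothing category. The sketch below is a minimal illustration of that arithmetic, assuming each completion has already been labeled with a category by hand. The function name `category_rates` and the sample labels are hypothetical; they are not the researchers’ code or data.

```python
from collections import Counter


def category_rates(labels):
    """Return the share of completions that fall into each category label."""
    total = len(labels)
    if total == 0:
        # No completions means no rates to report.
        return {}
    counts = Counter(labels)
    return {label: count / total for label, count in counts.items()}


# Hypothetical labels for 10 completions of one face; NOT data from the study.
sample = ["suit", "suit", "casual", "suit", "bikini",
          "casual", "suit", "other", "casual", "casual"]
rates = category_rates(sample)
print(f"suit: {rates['suit']:.0%}")  # prints "suit: 40%"
```

In a real audit, the labels would come from many completions per face, and the rates for men’s and women’s faces would be compared side by side.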
The algorithm learned, and repeated, prejudices about how men and women should dress. It even projected these prejudices onto the face of Democratic Congresswoman Alexandria Ocasio-Cortez, a well-known American progressive politician, attaching a body in a bikini to her face. Racial prejudice showed up as well: a white man’s face was paired with a body holding a tool, and a Black man’s face with a body holding a gun.

Jang Byung-tak, director of the Seoul National University AI Institute, said, “The better an AI works, the better it absorbs data, and as a result it learns human biases. You can see the AI repeating the association of men with suits and women with bikinis.” Jang said the industry is working on technical safeguards to filter out such biases, but that as long as AI learns from data containing human biases, the problem is unlikely to disappear. “Just as people think based on experience, AI thinks and makes associations based on data created by people,” Jang said. “These biases can be reduced only when the people who use AI technology act ethically.” The adage ‘garbage in, garbage out’ holds here without exception.

By Lim Jae-woo, staff reporter