COVID-19 struck like a bolt of lightning, and it has changed a great deal. One of those changes is the 'popularization of the Fourth Industrial Revolution'. Until now, the Fourth Industrial Revolution had largely been felt as a declarative slogan. Since COVID-19, however, that perception has shifted significantly: it is now widely understood to be a matter of survival rather than a slogan. ZDNet Korea looks ahead to the direction in which the Fourth Industrial Revolution will evolve through 10 keywords for the new year, Sinchuk-nyeon (辛丑年). [Editor's note]

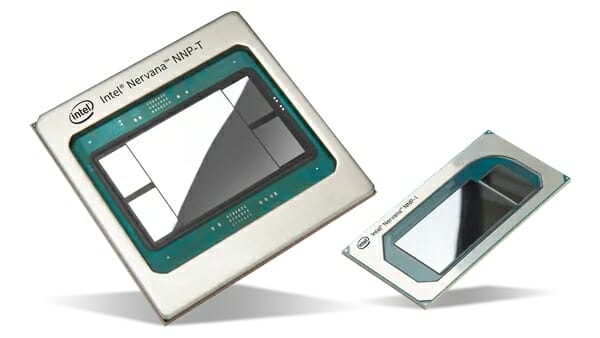

② Next-generation semiconductors: neural network accelerator chips, the brains of AI, are on the rise

Opening the era of artificial intelligence (AI), regarded as the core technology of the Fourth Industrial Revolution, requires semiconductors specialized for AI workloads such as deep learning and inference. Neural network accelerator chips are the leading example.

Neural network accelerator chips consume less power than existing CPUs and perform calculations much faster, so they are attracting attention not only in PCs and smartphones but in every field that requires AI processing.

In 2021, competition among global companies over R&D and early market capture is expected to intensify. Not only global companies and fabless companies with strengths in system semiconductors (SoCs), but also established semiconductor companies are expanding investment in neural network accelerator chips to strengthen their non-memory portfolios.

According to market research firms such as Report Linker, the neural network accelerator chip market that performs deep learning is expected to reach US$ 24.5 billion (about 36 trillion won) in 2025. The annual market growth rate is also very steep at 37%.

■ Complementing the existing processor to accelerate AI computation

Contrary to popular belief, neural network accelerator chips cannot think for themselves. Instead, they execute in a single instruction complex operations that previously had to be processed in several steps, or they perform operations specialized for the data used in AI processing much faster.

Neural network accelerator chips can be roughly divided into two types. The first type accelerates the various operations needed to implement AI, such as deep learning and inference. It reduces power consumption and processing time by handling data at different bit widths and by providing dedicated operations such as matrix multiplication and addition.
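As a rough illustration of what "different bit widths" and "dedicated matrix operations" mean, the following minimal sketch quantizes floating-point values to 8-bit integers and then performs an integer-only multiply-accumulate, the kind of step such a chip would run in hardware. The function names, the sample weights, and the scale factors are hypothetical, chosen for illustration, and do not describe any particular vendor's chip.

```python
def quantize(values, scale):
    """Map floats to signed 8-bit integers using one scale factor (assumed scheme)."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_matvec(weights_q, x_q):
    """Integer multiply-accumulate: one dot product per output row."""
    return [sum(w * x for w, x in zip(row, x_q)) for row in weights_q]


# Hypothetical layer weights and input, in floating point.
weights = [[0.5, -0.25], [1.0, 0.75]]
x = [0.5, -1.0]
w_scale, x_scale = 0.01, 0.01  # illustrative scale factors

weights_q = [quantize(row, w_scale) for row in weights]
x_q = quantize(x, x_scale)

# All heavy arithmetic happens on small integers.
acc = int8_matvec(weights_q, x_q)

# Rescale the integer accumulators back to real-valued outputs.
y = [a * w_scale * x_scale for a in acc]
print(weights_q, x_q, acc, y)
```

Narrower integers need less memory traffic and simpler arithmetic circuits than 32-bit floats, which is broadly where the power and time savings come from.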

The second type goes beyond accelerating the computations AI requires and works by mimicking human brain cells themselves. Chips such as IBM TrueNorth and Intel Loihi exist, but commercial products built on them can still be counted on one hand.
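To give a feel for what "mimicking brain cells" can mean, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is an assumption-laden illustration, not the actual design of TrueNorth or Loihi; the function name, threshold, and leak factor are all hypothetical.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.5):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    The membrane potential decays by `leak` each step, accumulates the
    incoming current, and emits a spike (1) when it reaches `threshold`,
    after which it resets to zero.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


# Hypothetical input current per time step.
spikes = simulate_lif([0.75, 0.75, 0.75, 0.0, 0.75, 0.75])
print(spikes)
```

The neuron does work only when enough input arrives to make it fire, which hints at why hardware built around this event-driven style can run at low power.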

■ Next-generation intelligent semiconductor project team launched... a total of 1 trillion won by 2029

After the global memory semiconductor supercycle (2017-2018), oversupply pushed unit prices down and created a crisis. In response, the government began fostering system semiconductors (SoCs) in 2019 to strengthen competitiveness in non-memory semiconductors.

Policy support at the national level is also accelerating. In April 2019, the government announced its 'System Semiconductor Vision and Strategy', setting out a vision of leaping from a memory semiconductor powerhouse to a comprehensive semiconductor powerhouse.

Following that, the Ministry of Science and ICT and the Ministry of Trade, Industry and Energy launched the next-generation intelligent semiconductor project team in September last year. They are promoting the development of AI-related semiconductor technology by investing a total of about KRW 1 trillion by 2029.

At the corporate level, companies that need AI semiconductors, such as Hyundai Mobis, Samchully, SK Telecom, and Hanwha Techwin; domestic fabless companies that develop them, such as Telechips and Skychips; and Samsung Electronics, SK Hynix, and DB Hi-Tek, which support them, are cooperating closely at each stage, from proof of concept through development to pre-production, to reduce trial and error.

Last year, 103 companies, 32 universities, and 12 research institutes took part in a total of 82 related projects. In addition, a total of 6 billion won is expected to be invested over about three years, from this year to 2023, in securing the original technologies needed for neural network accelerator chips, such as ultra-high-speed, low-power memory, neural network hardware, and brain-mimicking processors.

■ Neural network accelerator chip market to grow to 36 trillion won by 2025

The biggest advantage of the neural network accelerator chip is that AI processing, which previously had to rely on cloud computing, can be handled at the edge. This eliminates the delay incurred when a device sends its data to the cloud and waits to receive the result.

With the spread of 5G, data transmission delays are falling dramatically, but the possibility of data leakage cannot be ruled out in fields such as defense and medical care. Neural network accelerator chips are also highly important in fields that require real-time processing, such as voice recognition and autonomous driving.

Most smartphones released last year have neural network accelerator blocks built into their APs (application processors). Neural network accelerator chips are expected to be installed in every device that requires AI-related processing, including industrial robots and home appliances as well as computing devices.

According to market research firms such as Report Linker, the neural network accelerator chip market that performs deep learning is expected to reach $24.5 billion (about 36 trillion won) in 2025.

Estimates of this year's market size range from $3.9 billion (Tractica) to $9.7 billion (Gartner), depending on the research firm. However, the common view is that the market is unlikely to shrink while demand for efficient AI processing persists.

■ Global AP and processor companies compete

Most neural network accelerator chips on the market today are not standalone parts but are embedded as blocks in an AP or a PC/server processor.

Apple has included a "Neural Engine" in its processors since 2017, and Qualcomm built a Hexagon processor that accelerates AI processing into the Snapdragon 888 AP, which was unveiled in December.

Samsung Electronics is also accelerating NPU development under its 'System Semiconductor 2030 Vision'. The aim is to strengthen the competitiveness of Exynos, its AP for smartphones, while moving away from a portfolio focused on memory semiconductors such as DRAM and NAND flash.

The Exynos 990 AP in the Galaxy Note 20, released last year, carries two NPUs developed in-house and a DSP to enhance AI computation performance.

Unlike major global semiconductor companies, Samsung Electronics has not yet released a standalone neural network accelerator chip. However, according to industry sources, it is believed to hold a number of neural network accelerator chips and related technologies that could be installed in a wide range of products, including home appliances as well as mobile devices.

In 2017, Intel developed Loihi, a chip that mimics human brain cells. Intel says it consumes 1/45 the power of existing processors while processing more than 100 times faster. Recently, researchers at the National University of Singapore succeeded in building a robot with a sense of touch using Loihi chips.

The TrueNorth chip developed by IBM keeps power consumption to at most 200 mW and can perform 4.6 billion synaptic operations per second. Taking advantage of these characteristics, the US Air Force is conducting research aimed at installing TrueNorth chips in unmanned aerial vehicles (drones) within the next five years.