ScatterLab, the developer of the artificial intelligence (AI) chatbot ‘Iruda’, argued that its use of KakaoTalk conversations between couples, collected through another of its services, ‘Science of Love’, to develop the chatbot was within the scope of its privacy policy.

The company also said that when KakaoTalk conversations were used as material, addresses, account numbers, phone numbers, and real names were deleted and the conversations were split into individual sentences. It apologized that filtering may not have been performed properly in the process, so that some personal names and addresses may have remained.

Amid this controversy, the Personal Information Protection Commission has also launched an investigation to establish the facts of ScatterLab’s alleged personal information leak.

On the 12th, ScatterLab set out its position on whether it complied with the privacy policy, and its plans for addressing sexual harassment remarks, in a question-and-answer e-mail.

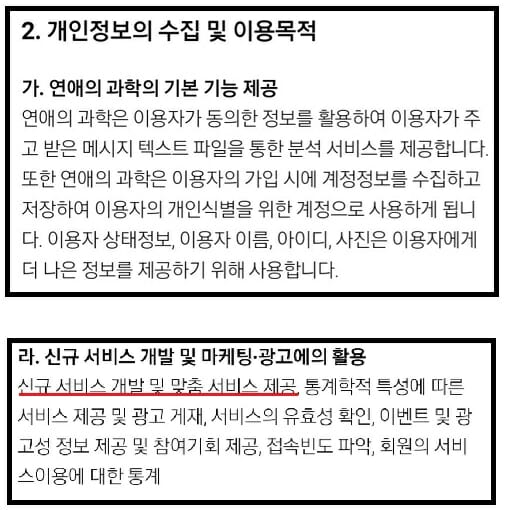

The company said, “Science of Love user data was used within the scope of the privacy policy to which users had given prior consent.” It added, “For Science of Love users who do not want their data used for AI training, we plan to delete the data and take additional measures so that it is not used in Iruda’s DB.”

In addition, regarding the exposure of personal information such as addresses, the company said, “It is difficult for a person to individually inspect 100 million individual sentences, so they were filtered mechanically by an algorithm. In this process we tried to account for as many variables as possible, but depending on the context, some personal names remained.” It continued, “We apologize for not paying closer attention to this and for the appearance of personal names. However, the name information in the sentences is not used in combination with other information.”
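To illustrate why this kind of mechanical filtering can leave names behind, the following is a minimal sketch of a rule-based scrubber. It is not ScatterLab’s algorithm, which the company has not disclosed. The patterns, the name list, and the romanized names are all hypothetical and chosen only for illustration.

```python
import re

# All patterns below are illustrative assumptions, not ScatterLab's rules.
# Real phone and account number formats are more varied than this.
PHONE_RE = re.compile(r"\b01[016789]-?\d{3,4}-?\d{4}\b")
ACCOUNT_RE = re.compile(r"\b\d{2,6}-\d{2,6}-\d{2,6}\b")
# Hypothetical name list; matches only exact, stand-alone names.
NAME_RE = re.compile(r"\b(?:Minsu|Jiyeon)\b")


def scrub(sentence: str) -> str:
    """Replace recognizable identifiers with placeholder tokens."""
    sentence = PHONE_RE.sub("[PHONE]", sentence)
    sentence = ACCOUNT_RE.sub("[ACCOUNT]", sentence)
    sentence = NAME_RE.sub("[NAME]", sentence)
    return sentence


if __name__ == "__main__":
    # An exact name and a phone number are caught.
    print(scrub("Call me at 010-1234-5678, Minsu."))
    # A name with a suffix attached ("Minsuya") slips past the name rule.
    print(scrub("Minsuya, send it to 110-234-567890"))
```

In this sketch the second sentence keeps the name because the rule only matches the exact listed form. This is one way a name can survive filtering depending on how it appears in context.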

ScatterLab plans to upgrade the relevant algorithms to address the filtering gaps, such as specific names and addresses. Because the company had planned to retrain Iruda quarterly, no further updates have been made to Iruda in the roughly three weeks since its release.

ScatterLab pretrained Iruda on KakaoTalk conversation data from Science of Love and uses a database of 100 million conversation sentences. In the data used, personal information such as the speaker’s name has been deleted, and speakers can be identified only by gender and age. In the pre-training stage, the AI learns only the correlation between context and answers found in conversations between people.

“Iruda is influenced by the context of the preceding conversation, and its answer is selected from among individual sentences. The context of the conversation over the past 10 turns, including the expressions, mood, and tone the user has used, has a strong influence,” the company said. “This can make users feel that they are receiving individualized answers.”
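The selection step described here can be sketched roughly as follows. This is a toy illustration only: Iruda uses a trained model, whereas this sketch substitutes simple word-overlap (cosine) scoring. The function names and sample sentences are hypothetical; only the 10-turn window comes from the company’s explanation.

```python
import math
from collections import Counter

MAX_TURNS = 10  # the company says the past 10 turns shape the answer


def bag(text: str) -> Counter:
    """Bag-of-words counts; a stand-in for a learned representation."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def select_response(history: list[str], candidates: list[str]) -> str:
    """Pick the candidate sentence closest to the recent context."""
    context = bag(" ".join(history[-MAX_TURNS:]))
    return max(candidates, key=lambda c: cosine(context, bag(c)))


if __name__ == "__main__":
    history = ["i watched a movie today", "the movie was so sad"]
    candidates = ["what movie was it", "i like pizza"]
    print(select_response(history, candidates))
```

Because only the most recent turns feed the score, the same candidate pool can yield different answers for different users, which fits the company’s remark about answers feeling individualized.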

In addition, the company explained that Iruda produced sexually harassing and hateful remarks because the service was still in its early stages and these problems were not caught during the beta test.

ScatterLab said, “(Last year) the beta test was conducted with about 2,000 users, but 800,000 users flocked to Iruda after the official launch, and once the service actually launched, user utterances appeared that were broader, more varied, and more serious than we had prepared for.” It added, “As a result, unexpected sexual or biased conversations by Iruda emerged, and after launching the service we became deeply aware that our response was lacking.”

The company added, “By applying more stringent labeling standards to the flawed data that emerged from conversations with users this time and learning from it, Iruda will improve into an AI that embodies society’s universal values.”